When you browse the SharePoint SDK, you come across some classes, such as ContentByQueryWebPart, that are marked as non-CLS-compliant. Such a class, or any type derived from it, may not be interoperable with the full range of .NET languages, which is something you really need to consider when using this class or deriving your custom types from it. By default, the C# compiler does not check your code for CLS compliance; you have to explicitly instruct it to do so.

For whatever reason, this class is explicitly decorated as not CLS-compliant, so it should be used with extra caution with regard to interoperability.

```csharp
[CLSCompliant(false)]
public class ContentByQueryWebPart : CmsDataFormWebPart
{
    // …
}
```
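As noted above, the C# compiler only checks CLS compliance once you opt in. Below is a minimal sketch of opting in, using a hypothetical `Counter` type that has nothing to do with SharePoint: marking the assembly `[assembly: CLSCompliant(true)]` turns the checks on, and a public member that exposes a non-CLS type such as `uint` then has to be marked `[CLSCompliant(false)]`, just as ContentByQueryWebPart is, or the compiler reports warning CS3003.

```csharp
using System;

// Opt the whole assembly in; without this attribute the C# compiler
// performs no CLS-compliance checks at all.
[assembly: CLSCompliant(true)]

// Hypothetical example type: CLS-compliant except for one member.
public class Counter
{
    // uint is not a CLS type. Marking the member CLSCompliant(false)
    // silences the CS3003 warning for it.
    [CLSCompliant(false)]
    public uint RawValue { get; set; }

    // CLS-compliant alternative for languages without unsigned types.
    public long Value => RawValue;
}

public static class Program
{
    public static void Main()
    {
        var counter = new Counter { RawValue = 42 };
        Console.WriteLine(counter.Value); // 42
    }
}
```

Remove the member-level attribute and rebuild to see the warning. Along the same lines, if your assembly is marked compliant and you derive a public class from ContentByQueryWebPart, expect a CS3009 warning (base type is not CLS-compliant) unless you also mark the derived class `[CLSCompliant(false)]`.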

I have used both types of external drives (USB 2.0 and IEEE 1394/FireWire connections) for my SharePoint images, and in my experience the USB 2.0 drives have always performed better than the FireWire drives. The 1394 drives sometimes give me headaches after a restart, and I basically have to unplug them and plug them back in, so keep this in mind as the first tip.

SharePoint is a resource-intensive product, and on top of that a virtualized SQL Server adds a lot of overhead, so you can never expect the same performance and transfer rate you get from an internal IDE/SCSI drive. None of the existing external hard drives are designed to give you that kind of performance.

If you are also performing backups, consider getting two drives: one dedicated to your SharePoint images and the other for storing your backups. I would never store backups side by side with my images. A large external hard drive (250 GB or greater) is necessary.

Remember, most external hard drives come pre-formatted, so always check which file system is used in the first place before you start creating your demo or non-demo images.

If you create VHDs on a FAT32 volume, Virtual PC splits them into multiple files, because FAT32 cannot hold a single file of 4 GB or more. That may not be what you want, and you have to take extra steps to merge them all.
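The post does not say how to check the file system; besides the drive's Properties dialog in Explorer, one option is `System.IO.DriveInfo.DriveFormat` from the standard .NET library. The sketch below lists each ready drive with its format and flags FAT32 volumes. `DriveFormatCheck`, `IsFat32`, and `Fat32MaxFileBytes` are hypothetical names; the constant is the FAT32 per-file maximum of 2^32 - 1 bytes.

```csharp
using System;
using System.IO;

// Hypothetical helper; only DriveInfo is a real .NET API here.
public static class DriveFormatCheck
{
    // Largest single file FAT32 can hold: 4 GiB minus one byte.
    public const long Fat32MaxFileBytes = (1L << 32) - 1;

    public static bool IsFat32(string driveFormat) =>
        string.Equals(driveFormat, "FAT32", StringComparison.OrdinalIgnoreCase);

    public static void Main()
    {
        foreach (DriveInfo drive in DriveInfo.GetDrives())
        {
            // Skip drives that are not ready (e.g. an empty card reader).
            if (!drive.IsReady) continue;
            try
            {
                string format = drive.DriveFormat;
                string note = IsFat32(format)
                    ? "FAT32: large VHDs will be split"
                    : "OK";
                Console.WriteLine($"{drive.Name} {format} {note}");
            }
            catch (Exception ex) when (ex is IOException || ex is UnauthorizedAccessException)
            {
                Console.WriteLine($"{drive.Name} format unavailable: {ex.Message}");
            }
        }
    }
}
```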

Finally, and for Vista users in particular, always make sure that the external hard drive you are about to purchase has no compatibility issues with your OS. I know some people who had problems hooking up their Western Digital 500 GB external hard drive, and they are still stuck!

1) Definitely make sure that you call the Update method when you are done adding properties to the property bag; otherwise your changes won't be saved.

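```csharp
// The SPWeb from SPContext is owned by SharePoint; do not dispose it.
SPWeb curWeb = SPContext.Current.Web;
// Add throws if the key already exists; use the indexer to overwrite.
curWeb.Properties.Add("MyProperty", DateTime.Now.ToString());
curWeb.Properties.Update();
```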

2) In some cases I have found that SPWeb.Properties.Remove("MyProperty") does not work, even if you call the Update method afterwards. In those cases, when I set the property value to null, it is removed from the collection and SPWeb.Properties.ContainsKey("MyProperty") no longer returns true, which means it is gone.

```csharp
SPWeb curWeb = SPContext.Current.Web;
// Setting the value to null removes the entry once Update() is called.
curWeb.Properties["MyProperty"] = null;
curWeb.Properties.Update();
```

To find out whether a property exists, simply check this:

```csharp
if (curWeb.Properties["MyProperty"] != null)
{
    // Exists
}
else
{
    // Does not exist
}
```
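The `!= null` existence check works on the assumption that SPPropertyBag derives from `System.Collections.Specialized.StringDictionary`, whose indexer returns null for a missing key (verify this against your SDK version). The sketch below runs against a plain StringDictionary, so no SharePoint, persistence, or `Update()` is involved; it shows the null lookup, that `Add` rejects a duplicate key, and that the indexer adds or overwrites. `PropertyBagDemo`, `Exists`, and `SetProperty` are hypothetical names.

```csharp
using System;
using System.Collections.Specialized;

// Hypothetical demo against a plain StringDictionary (not SharePoint).
public static class PropertyBagDemo
{
    // Same existence test as above: a missing key reads back as null.
    public static bool Exists(StringDictionary bag, string key) => bag[key] != null;

    // The indexer adds a new key or overwrites an existing one.
    public static void SetProperty(StringDictionary bag, string key, string value) => bag[key] = value;

    public static void Main()
    {
        var bag = new StringDictionary();
        Console.WriteLine(Exists(bag, "MyProperty"));   // False

        bag.Add("MyProperty", "first");
        try
        {
            bag.Add("MyProperty", "second");            // duplicate key
        }
        catch (ArgumentException)
        {
            Console.WriteLine("Add rejected the duplicate key");
        }

        SetProperty(bag, "MyProperty", "second");
        Console.WriteLine(bag["MyProperty"]);            // second
        Console.WriteLine(Exists(bag, "MyProperty"));    // True
    }
}
```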


Situation: Upgrading a large site collection from SPS 2003 to MOSS 2007 in a completely virtualized, medium server farm environment. In brief, when you have a large site collection, the data copy step of a gradual upgrade may time out.

Upgrade log file:

```text
[SPSqlCommandFactory] [DEBUG] [10/20/2006 6:26:00 AM]: SqlCommand.CommandTimeout = 23635
[SPSiteCollectionCopier] [DEBUG] [10/20/2006 6:26:00 AM]: Copying table AllDocStreams.
[SPSiteCollectionCopier] [ERROR] [10/20/2006 1:00:00 PM]: Failed copying site SPSite Url=http://devhmoss.
[SPSiteCollectionCopier] [ERROR] [10/20/2006 1:00:00 PM]: Timeout expired. The timeout period elapsed prior to completion of the operation or the server is not responding.
[SPSiteCollectionCopier] [ERROR] [10/20/2006 1:00:00 PM]: at System.Data.SqlClient.SqlInternalConnection.OnError(SqlException exception, Boolean breakConnection)
   at System.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj)
   at System.Data.SqlClient.TdsParserStateObject.ReadSniError(TdsParserStateObject stateObj, UInt32 error)
   at System.Data.SqlClient.TdsParserStateObject.ReadSni(DbAsyncResult asyncResult, TdsParserStateObject stateObj)
   at System.Data.SqlClient.TdsParserStateObject.ReadPacket(Int32 bytesExpected)
   at System.Data.SqlClient.TdsParserStateObject.ReadBuffer()
   at System.Data.SqlClient.TdsParserStateObject.ReadByte()
   at System.Data.SqlClient.TdsParser.Run(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj)
   at System.Data.SqlClient.SqlCommand.RunExecuteNonQueryTds(String methodName, Boolean async)
   at System.Data.SqlClient.SqlCommand.InternalExecuteNonQuery(DbAsyncResult result, String methodName, Boolean sendToPipe)
   at System.Data.SqlClient.SqlCommand.ExecuteNonQuery()
   at Microsoft.SharePoint.Utilities.SqlSession.ExecuteNonQuery(SqlCommand command)
   at Microsoft.SharePoint.Upgrade.SPSiteCollectionMigrator.SPBaseSiteCollectionCopier.Copy()
[SPManager] [ERROR] [10/20/2006 1:30:00 PM]: Migrate [SPMigratableSiteCollection Parent=SPManager] failed.
[SPManager] [ERROR] [10/20/2006 1:30:00 PM]: This SqlTransaction has completed; it is no longer usable.
[SPManager] [ERROR] [10/20/2006 1:30:00 PM]: at System.Data.SqlClient.SqlTransaction.ZombieCheck()
   at System.Data.SqlClient.SqlTransaction.Rollback(String transactionName)
   at Microsoft.SharePoint.Utilities.TransactionalSqlSession.Rollback()
   at Microsoft.SharePoint.Upgrade.SPSiteCollectionMigrator.SPBaseSiteCollectionCopier.Copy()
   at Microsoft.SharePoint.Upgrade.SPSiteCollectionMigrator.Migrate()
   at Microsoft.SharePoint.Upgrade.SPManager.Migrate(Object o, Boolean bRecurse)
[SPManager] [DEBUG] [10/20/2006 1:30:00 PM]: Elapsed time migrating [SPMigratableSiteCollection Parent=SPManager]: 21:16:28.3861356.
[SPManager] [INFO] [10/20/2006 1:30:00 PM]: Gradual Upgrade session finishes. root object = SPMigratableSiteCollection Parent=SPManager, recursive = True. 2 errors and 0 warnings encountered.
[SPManager] [DEBUG] [10/20/2006 1:30:01 PM]: Removing exclusive upgrade regkey by setting the mode to none
```

Events on the database server:

- Autogrow of file ‘DevMoss_SITE_Pair_log’ in database ‘DevMoss_SITE_Pair’ cancelled or timed out after 4062 ms. Use ALTER DATABASE to set a smaller FILEGROWTH or to set a new size.

OR

- Autogrow of file ‘WSSUP_Temp_cda0b018-aa08-4abe-b752-418c62861754_log’ in database ‘WSSUP_Temp_cda0b018-aa08-4abe-b752-418c62861754’ took 83109 milliseconds. Consider using ALTER DATABASE to set a smaller FILEGROWTH for this file.

Actions taken: Pre-growing the transaction log files in the pair and temp databases, as stated here, did not help. Neither did setting a smaller growth increment to prevent a timeout during the autogrow operation on the database server (which is virtualized and expected to be slow), as described in this KB article. We also tried bumping up the resources on all servers; again, no luck!

Cause: Because the throughput assumed for each upgrade action is inaccurate, and hardware performance is unpredictable in real time, the timeout computed during the upgrade process can sometimes break a legitimate SQL query that is simply running a little longer than usual.
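The upgrade log is consistent with this. `SqlCommand.CommandTimeout` is measured in seconds, so 23635 s is 6 h 33 min 55 s; added to the 6:26:00 AM start of the AllDocStreams copy, it expires at 12:59:55 PM, a few seconds before the 1:00:00 PM failure. A small sketch of that arithmetic follows, assuming the logged timeout applies to the AllDocStreams copy command (the log does not say so explicitly) and bearing in mind that the timestamps look rounded to the minute. `TimeoutCheck` and `GapAfterExpiry` are hypothetical names.

```csharp
using System;

public static class TimeoutCheck
{
    // How long after the computed timeout expired the failure was logged.
    public static TimeSpan GapAfterExpiry(DateTime started, DateTime failed, int timeoutSeconds) =>
        failed - (started + TimeSpan.FromSeconds(timeoutSeconds));

    public static void Main()
    {
        // Values taken from the upgrade log (timestamps appear rounded to whole minutes).
        var started = new DateTime(2006, 10, 20, 6, 26, 0);  // Copying table AllDocStreams
        var failed = new DateTime(2006, 10, 20, 13, 0, 0);   // Timeout expired
        const int commandTimeout = 23635;                    // SqlCommand.CommandTimeout, seconds

        TimeSpan timeout = TimeSpan.FromSeconds(commandTimeout);
        Console.WriteLine(timeout);                                  // 06:33:55
        Console.WriteLine((started + timeout).TimeOfDay);            // 12:59:55
        Console.WriteLine(GapAfterExpiry(started, failed, commandTimeout).TotalSeconds); // 5
    }
}
```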

Solution: Gradual upgrade is not the right choice here 🙂 Use DB migration instead!


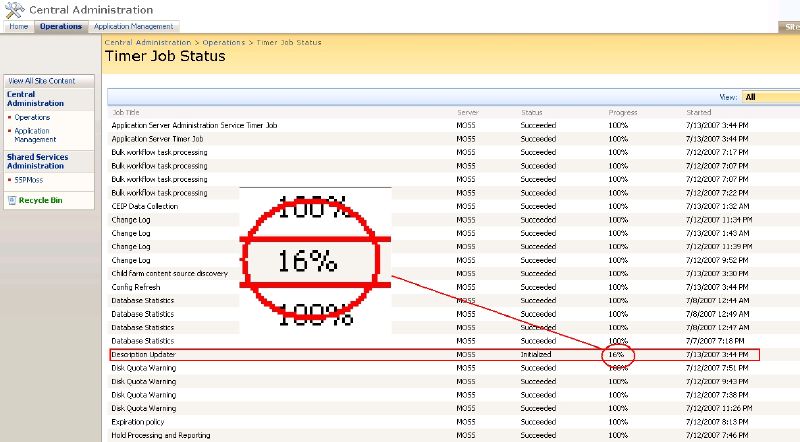

The following snippet demonstrates how to implement a timer job and update its progress using SPJobDefinition.UpdateProgress. The example is only meant to show how to provision a timer job and report its progress; it may not map well to real-world scenarios.

```csharp
using System;
using Microsoft.SharePoint;
using Microsoft.SharePoint.Administration;

public class AutomatcSiteDescUpdater : SPJobDefinition
{
    // Parameterless constructor required for serialization of the job definition.
    public AutomatcSiteDescUpdater()
        : base()
    {
    }

    public AutomatcSiteDescUpdater(string jobName, SPService service, SPServer server, SPJobLockType targetType)
        : base(jobName, service, server, targetType)
    {
    }

    public AutomatcSiteDescUpdater(string jobName, SPWebApplication webApplication)
        : base(jobName, webApplication, null, SPJobLockType.ContentDatabase)
    {
        this.Title = "Description Updater";
    }

    public override void Execute(Guid contentDbId)
    {
        SPWebApplication webApplication = this.Parent as SPWebApplication;
        SPSiteCollection siteCol = webApplication.Sites;
        int siteColCount = siteCol.Count; // In my example (also shown in the picture below) there were 21 site collections.

        for (int i = 0; i < siteColCount; i++)
        {
            // The indexer returns a new SPSite that must be disposed;
            // its RootWeb is cleaned up when the SPSite is disposed.
            using (SPSite site = siteCol[i])
            {
                SPWeb rootWeb = site.RootWeb;
                rootWeb.Description = "Description of this web was last updated on " + DateTime.Now.ToString();
                rootWeb.Update();
            }

            // Update the progress bar; count the site just finished so the last one reports 100%.
            this.UpdateProgress((i + 1) * 100 / siteColCount);
        }
    }
}
```

Progress gets updated as you refresh the page.
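A note on the progress arithmetic: computing the percentage as `i * 100 / siteColCount` counts only the sites finished before the current one, so with 21 site collections the last value reported is 95, never 100. Counting the site just completed, `(i + 1) * 100 / siteColCount`, ends at exactly 100. The standalone sketch below prints both values side by side; `ProgressMath` and `PercentAfter` are hypothetical names, and no SharePoint API is involved.

```csharp
using System;

public static class ProgressMath
{
    // Percentage to report after finishing item `index` (0-based) of `count`.
    // Integer division truncates, but (index + 1) makes the final value 100.
    public static int PercentAfter(int index, int count) => (index + 1) * 100 / count;

    public static void Main()
    {
        const int siteCollections = 21; // count from the example above
        for (int i = 0; i < siteCollections; i++)
        {
            int before = i * 100 / siteCollections;        // counts only earlier sites
            int after = PercentAfter(i, siteCollections);  // includes the current site
            Console.WriteLine($"site {i + 1,2}: {before,3}% vs {after,3}%");
        }
    }
}
```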
